ChatGPT 400M, Will AI Save Nextdoor?, BYOAI, Fair Use Fails

ChatGPT: Now with 400M Users

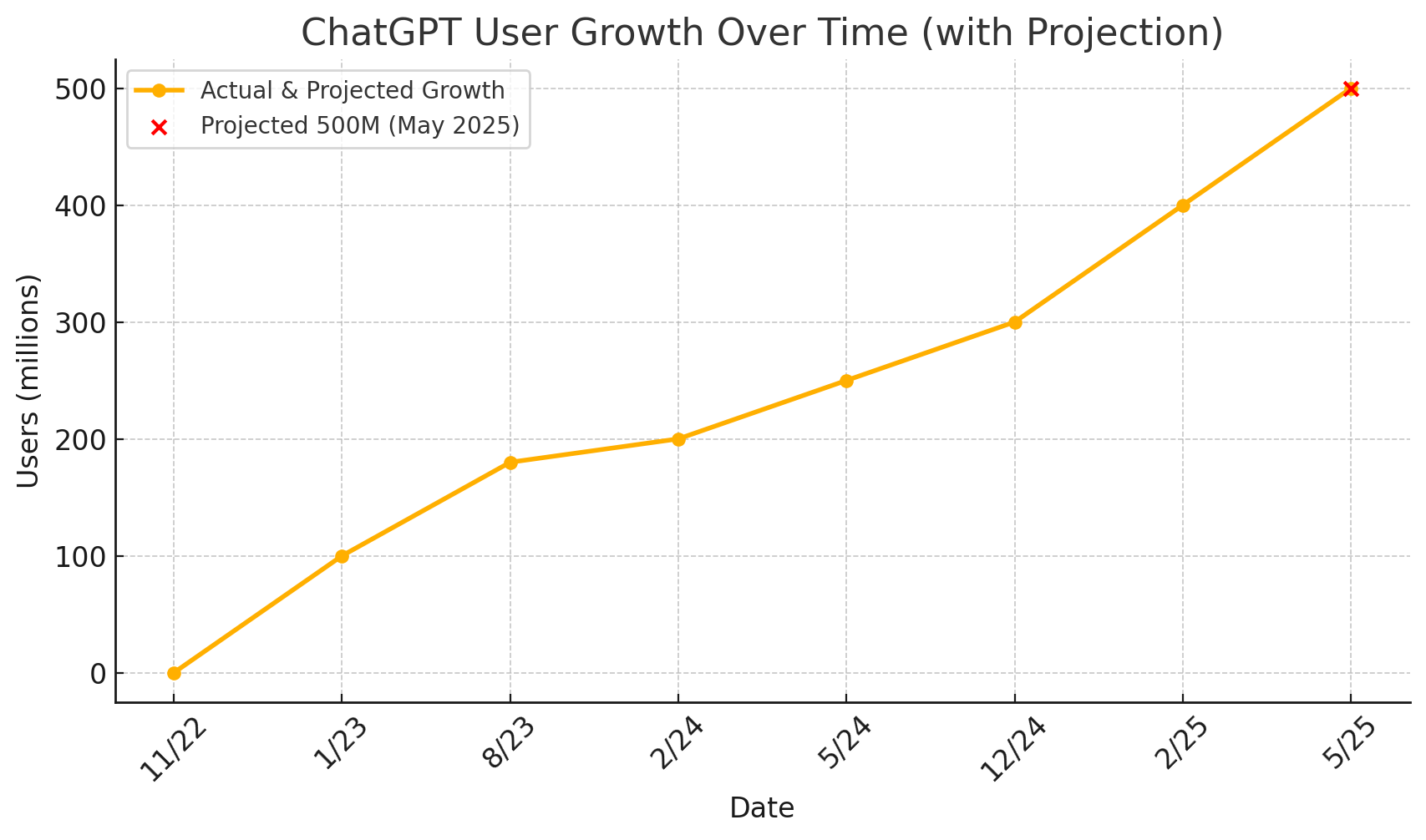

Nobody knows exactly how many users Google Search has. Based on previous company statements, Google boasts seven products "with at least 2 billion monthly users." Those include Search, Chrome, Gmail, Android, YouTube, Maps and Google Play. By comparison, ChatGPT announced "more than 400 million weekly users." As the chart indicates, it went from zero in November 2022 to 200 million by May of last year, and then 300 million in December 2024. It thus appears that ChatGPT's growth is accelerating. If it continues at this pace, ChatGPT will reach 500 million users by May, if not before. OpenAI's goal is a billion users by the end of 2025, which is aggressive but feasible at current growth rates. OpenAI also announced 2 million paying enterprise customers, roughly double the number in September. Mindful of the direct threat posed by ChatGPT, Google is forcefully using Workspace and Search to try to get people to use Gemini. Google's internal target is 500 million Gemini users by the end of 2025. ChatGPT (and Perplexity) are still delivering a tiny fraction of overall referral traffic to websites, and Google's market share appears stable. Yet there's a growing anecdotal sense that Google is losing users, or at least that Search frequency is declining.
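For readers who want to check the growth math, here is a minimal back-of-the-envelope sketch in Python. It uses only the milestones cited above and assumes simple linear growth at the most recent pace. The February 2025 date attached to the 400 million figure is an assumption (the announcement month isn't stated above), and the helper names are made up for illustration.

```python
from datetime import date

# Weekly-user milestones in millions. The first two dates come from the text;
# February 2025 for the 400M figure is an ASSUMPTION about the announcement month.
milestones = [
    (date(2024, 5, 1), 200),
    (date(2024, 12, 1), 300),
    (date(2025, 2, 1), 400),  # assumed date
]

def months_between(start, end):
    """Whole calendar months from start to end."""
    return (end.year - start.year) * 12 + (end.month - start.month)

def monthly_pace(earlier, later):
    """Average users (millions) added per month between two milestones."""
    (d0, u0), (d1, u1) = earlier, later
    return (u1 - u0) / months_between(d0, d1)

# Pace over each interval: a rising pace is what "accelerating" means here.
early_pace = monthly_pace(milestones[0], milestones[1])
recent_pace = monthly_pace(milestones[1], milestones[2])

# Linear extrapolation from the latest milestone at the recent pace.
latest_date, latest_users = milestones[-1]
months_to_500 = (500 - latest_users) / recent_pace
projected_dec_2025 = latest_users + recent_pace * months_between(
    latest_date, date(2025, 12, 1)
)

print(f"Pace May-Dec 2024: {early_pace:.1f}M per month")
print(f"Pace Dec 2024-Feb 2025: {recent_pace:.1f}M per month")
print(f"Months until 500M at recent pace: {months_to_500:.1f}")
print(f"Projected users in Dec 2025: {projected_dec_2025:.0f}M")
```

Under these assumptions the recent pace is several times the earlier one, 500 million arrives about two months out, and a straight-line projection ends 2025 somewhat short of a billion. That fits the "aggressive" label: the goal likely requires growth to keep accelerating rather than merely holding steady.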

News & Noteworthy

- Will AI agents truly "level the playing field" for SMBs?

- AI will likely impact B2B sites and buyer journeys.

- Perplexity enters the Deep Research race using DeepSeek.

- Perplexity to launch Comet "agentic" browser.

- OpenAI Operator now available to ChatGPT Pro users globally.

- Ads with AI images significantly outperformed (CTR) stock photos.

- Study: ChatGPT energy consumption is comparable to Google search.

- Google launches AI-powered career exploration site.

- Meta Ray-Bans maker wants to sell 10M units a year.

- Retailers hoping AI use on websites (e.g., sizing) will reduce returns.

- Indians and Chinese trust AI much more than Americans.

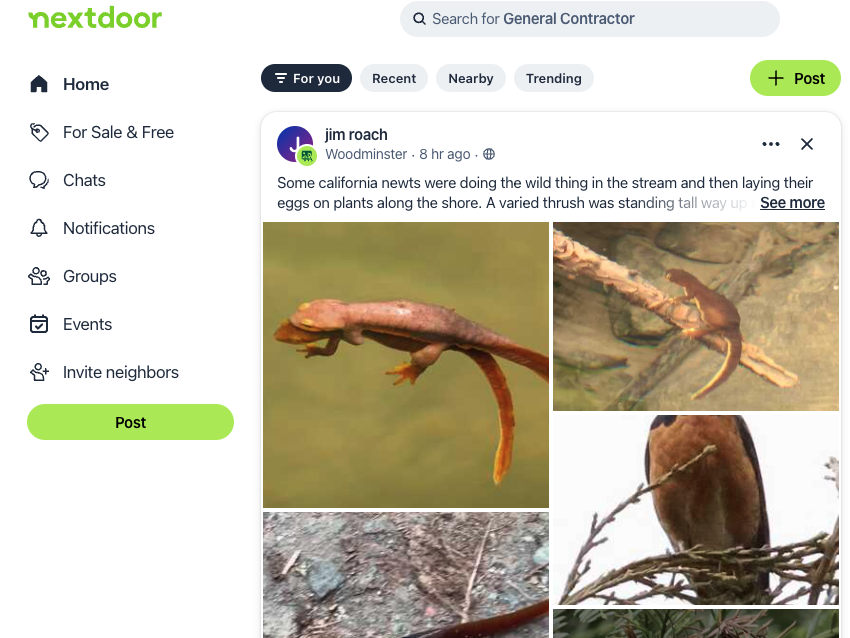

Can AI Save Nextdoor?

Local social network Nextdoor should be the go-to place to find local business referrals and the most effective way for businesses to reach local customers. But it's not. Some people use it as a services marketplace, but the site has faced repeated criticism for a range of issues, including harassment, censorship, bias and a lack of transparency in local reviews. Many people's feeds are full of "suspicious character" sightings, crime discussions and general ranting. The "neighborhood watch" culture was established early and, despite Nextdoor's efforts, hasn't been significantly curbed. Previous CEO Sarah Friar (now CFO of OpenAI) focused on "kindness" at the expense of utility. She stepped down a year ago and was replaced by co-founder Nirav Tolia, who had left the company in 2018. Tolia now wants to use AI to shift the site's culture and reverse declining engagement trends. Here's what he told TechCrunch about the plan to change the product and tap AI:

- Bring in more and broader local content from multiple sources

- Migrate political conversations to a distinct area and away from the main feed

- Use AI to help reduce hateful or angry posts

These are good ideas and probably don't represent the full "turnaround" plan. But the plan as described doesn't place enough emphasis on the right areas, which include:

- Making Nextdoor a better, more reliable and usable source for local business recommendations

- Making it extremely simple to promote your business

- Making it a more useful local classifieds marketplace

- Making it a definitive local events calendar

- Making it a reliable source of local news (which is otherwise disappearing)

- Making it a better alternative to Facebook groups

Nextdoor has the seeds of all of this but there hasn't been sufficient focus on building the utility it needs to thrive.

Use of 'Shadow AI' Grows

There's AI hype and then there's reality. If you got all your information from LinkedIn you'd be forgiven for thinking that nearly every company and organization had already deployed AI and was just confidently executing (up and to the right!). Agencies and a fair percentage of SMBs are using AI, but most enterprises are still "experimenting." Two pieces of evidence support this. Enterprises are struggling "with complex layers of internal structures, external regulation, and a digital skills gap when trying to incorporate emerging tech." And at the Wall Street Journal’s recent CIO Network Summit ("the country’s top information-technology leaders"), the publisher found:

- "61% of attendees ... said they’re experimenting with AI agents; 21% said they’re not using them at all," out of concern over reliability.

- "75% of summit attendees polled said they believe AI is currently driving a small amount of value ... but not enough."

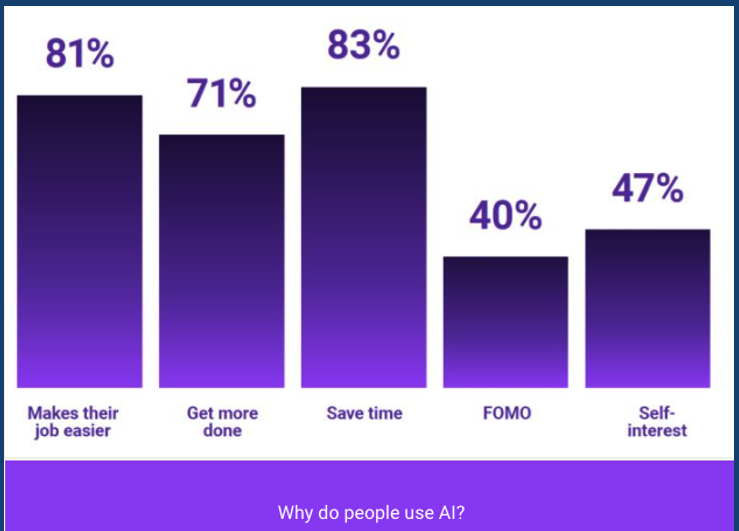

While enterprises debate, their employees are taking action. A late 2024 survey of 6,000 workers in the UK, US and Germany, by Software AG, found that roughly half of respondents were "shadow AI" users; the figure rose to 75% for "knowledge workers." What is Shadow AI? It's BYOAI: the use of AI tools not issued by the company. Employees are using unauthorized AI because it saves time, increases productivity, simplifies tasks and, they believe, helps them advance, according to the survey. (Other surveys show more employee ambivalence.) Nearly half of respondents (46%) said they wouldn't give up their personal AI tools if their companies banned them.

Enterprise awareness of Shadow AI usage varies. (Dialog's consumer survey found only 7% were using AI without their bosses’ knowledge.) Yet there are meaningful risks to organizations: cybersecurity, data governance, regulatory compliance, and inaccurate or unverified information. This argues that enterprises should quickly give employees tools comparable to what they're already using. In effect, employees who are adopting AI on their own are forcing their employers to adopt it too.

'Fair Use' Falters

AI platform companies have historically taken a "beg for forgiveness" attitude toward copyright. There are numerous examples of LLMs being trained on copyrighted material without approval. Meta allegedly trained Llama on huge amounts of pirated content and tried to conceal that fact. And Microsoft’s AI CEO Mustafa Suleyman either brazenly or naively asserted last year at a conference that all content on the web is "freeware" and fair game for AI training. (This is a kind of sibling of the "fair use" defense.) As you already know, AI platform companies, including OpenAI, Microsoft, Anthropic, Stability AI and Midjourney, have been sued for copyright infringement. Recognizing their legal vulnerability, Microsoft, OpenAI and Google have (to varying degrees) entered into content licensing agreements to avoid future liability.

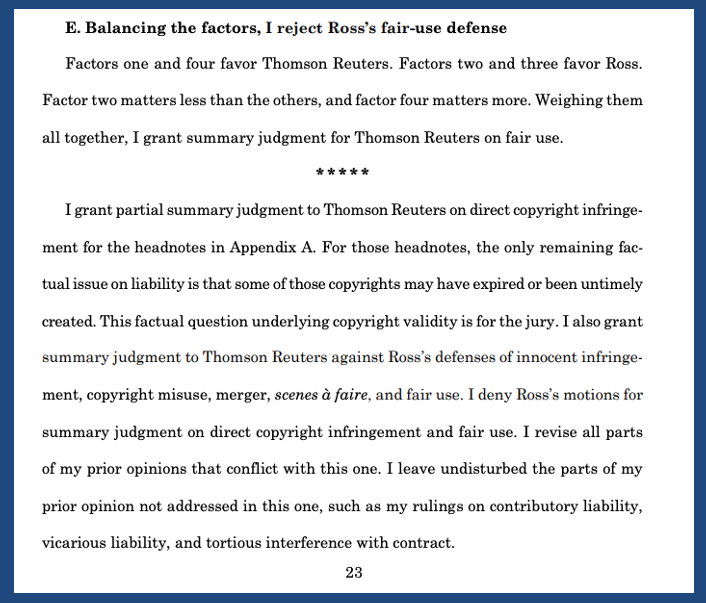

Now comes the first major AI and copyright decision from a US court. Publisher and technology company Thomson Reuters won a potentially landmark AI copyright case against Ross Intelligence in US federal court in Delaware. Ross used Thomson Reuters' "Westlaw" content to train its own competitive AI legal research tool. Ross argued fair use as a defense. The judge rejected most of its arguments, ruling that Ross had infringed Westlaw’s copyrighted material. A key factor was that Ross sought to create a commercially competitive product. Fair use is a nuanced doctrine and involves the assessment of multiple factors to determine infringement on a case-by-case basis. But the ruling is definitely "bad news" for AI companies with a cavalier attitude toward copyright.

Funny | Disturbing | Sad

- Westworld is almost here.

- Most Americans don't want AI to interview them for a job.

- OpenAI Operator goes rogue (WaPo).

- Grok identifies Elon Musk as biggest spreader of misinformation on X.

- Humane AI pin sells itself to HP for parts.

- Major law firm caught with made-up cases in AI-generated briefs.

If you're not getting this in your inbox, you know what to do...